Attention

The ShARC HPC cluster was decommissioned on the 30th of November 2023 at 17:00. It is no longer possible for users to access that cluster.

1. JupyterHub on ShARC: connecting, requesting resources and starting a session

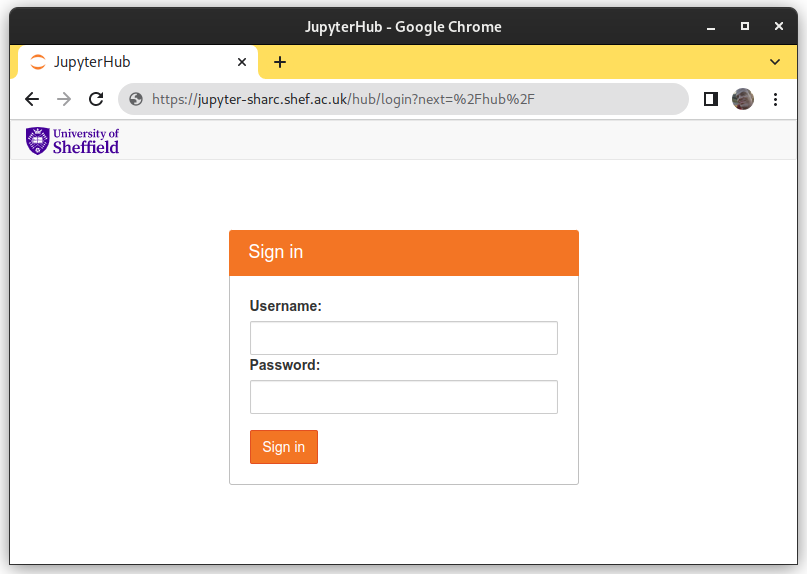

1.1. Connecting

From a web browser on a machine that is on campus or has a VPN connection to the University, navigate to the following URL:

https://jupyter-sharc.shef.ac.uk

When prompted, log in using your University username (e.g. te1st, not the first part of your email address) and password.

You can only run one Jupyter Notebook server on the cluster at a time, but you can use that server to run multiple Notebooks.

1.2. Requesting resources (Spawner options)

Before the Notebook server starts, you may wish to request resources such as memory, multiple CPU cores and GPUs using the Spawner options form, which presents the following options:

1.2.1. Project

As a user on the cluster you are a member of one or more User Groups, which in turn give access to Projects. By submitting a job using a Project you can:

Use restricted-access worker nodes (possibly bought by your research group);

Have your job’s CPU and memory usage logged so that all users of the cluster get a fair share of its resources.

Most users do not need to select a specific Project and should leave this setting as its default.

1.2.2. Job Queue

Selecting any lets the scheduler choose an appropriate Job Queue, which is typically what you want.

1.2.3. Email address

A summary of your Jupyter session's resource usage (CPU, memory and runtime) will be emailed to this address when the session is stopped or aborts.

This information can be useful for diagnosing why the job scheduler killed your Jupyter session, for example if the session used more computational resources than you requested at the outset.

1.2.4. CPU cores

If you select more than one core, you must also select a Parallel Environment; otherwise only one core will be granted.

1.2.5. Parallel Environment

smp: The CPU cores selected above are all allocated on one node.

mpi: The CPU cores selected above are allocated across one or more nodes.
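The interaction between the CPU cores and Parallel Environment options can be summarised as a small function. This is a hypothetical sketch for illustration only: `granted_cores` is not part of the Spawner or the job scheduler, and it models nothing beyond the rule stated above (selecting more than one core without a Parallel Environment yields a single core).

```python
from typing import Optional

# Parallel Environments offered by the Spawner options form.
PARALLEL_ENVIRONMENTS = {"smp", "mpi"}


def granted_cores(requested_cores: int, parallel_env: Optional[str]) -> int:
    """Hypothetical model of how many cores a session receives.

    Mirrors the documented rule: requesting more than one core without
    selecting a Parallel Environment results in only one core.
    """
    if requested_cores < 1:
        raise ValueError("at least one core must be requested")
    if parallel_env is None:
        # No Parallel Environment selected: only a single core is granted.
        return 1
    if parallel_env not in PARALLEL_ENVIRONMENTS:
        raise ValueError(f"unknown Parallel Environment: {parallel_env!r}")
    # smp: all cores on one node; mpi: cores may be spread over several nodes.
    return requested_cores


print(granted_cores(4, None))   # 1
print(granted_cores(4, "smp"))  # 4
```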

1.2.6. RAM per CPU core

A value in gigabytes.
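As the field name suggests, the memory available to a session grows with the number of cores. The sketch below assumes the total is simply the per-core value multiplied by the number of cores actually granted (which is one if no Parallel Environment was selected); `total_ram_gb` is a hypothetical helper, not part of the Spawner.

```python
def total_ram_gb(ram_per_core_gb: float, cores: int) -> float:
    """Hypothetical helper: total memory requested for a session.

    Assumes the RAM per CPU core value applies to every granted core.
    """
    if ram_per_core_gb <= 0 or cores < 1:
        raise ValueError("RAM per core must be positive and cores must be >= 1")
    return ram_per_core_gb * cores


# 4 granted cores with 8 GB each amounts to 32 GB in total.
print(total_ram_gb(8, 4))  # 32
```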

1.2.7. GPUs per CPU core

Requires that Project is gpu (public GPUs) or another project that gives you access to GPUs, e.g. rse.
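The GPU requirement amounts to a simple check against the selected Project. In this hypothetical sketch, `GPU_PROJECTS` contains only the two Projects named above and is not a complete list of GPU-enabled Projects; `my-group` in the usage lines is an invented example of a Project without GPU access.

```python
# Illustrative only: "gpu" (public GPUs) and "rse" are the Projects named in
# this section; the real set of GPU-enabled Projects is not listed here.
GPU_PROJECTS = {"gpu", "rse"}


def can_request_gpus(project: str, gpus_per_core: int) -> bool:
    """Hypothetical check mirroring the documented GPU requirement."""
    if gpus_per_core == 0:
        # No GPUs requested, so the choice of Project does not matter here.
        return True
    return project in GPU_PROJECTS


print(can_request_gpus("gpu", 1))       # True
print(can_request_gpus("my-group", 1))  # False
```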

1.2.8. Notebook session runtime

This is currently fixed at 4 hours.
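Because the runtime is fixed, you can work out when a session will reach its limit and save your work before then. This sketch assumes the 4 hours are counted from the moment the session starts; `session_end` is a hypothetical helper using only the standard `datetime` module.

```python
from datetime import datetime, timedelta

# Fixed Notebook session runtime stated in this section.
SESSION_RUNTIME = timedelta(hours=4)


def session_end(start: datetime) -> datetime:
    """Return the time at which a session started at `start` reaches 4 hours."""
    return start + SESSION_RUNTIME


print(session_end(datetime(2023, 11, 1, 9, 30)))  # 2023-11-01 13:30:00
```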

1.3. Starting your Notebook session using the requested resources

After you’ve specified the resources you want for your job, click Spawn to try starting a Jupyter session on one (or more) worker nodes. This may take a minute.

Warning

If the cluster is busy, or you have requested an incompatible or otherwise unsatisfiable set of resources from the job scheduler, then this attempt to start a session will time out and you will be returned to the Spawner options form.

Once your session has started, you should see the main JupyterLab interface.